How I used my school's HPC to train my own Deep Neural Network

Almost a year ago, I dove head-first into Deep Learning. As I explored the field's potential and saw how it could take the tech world by storm, I dug deeper and eventually became certified in TensorFlow, Google's Deep Learning framework.

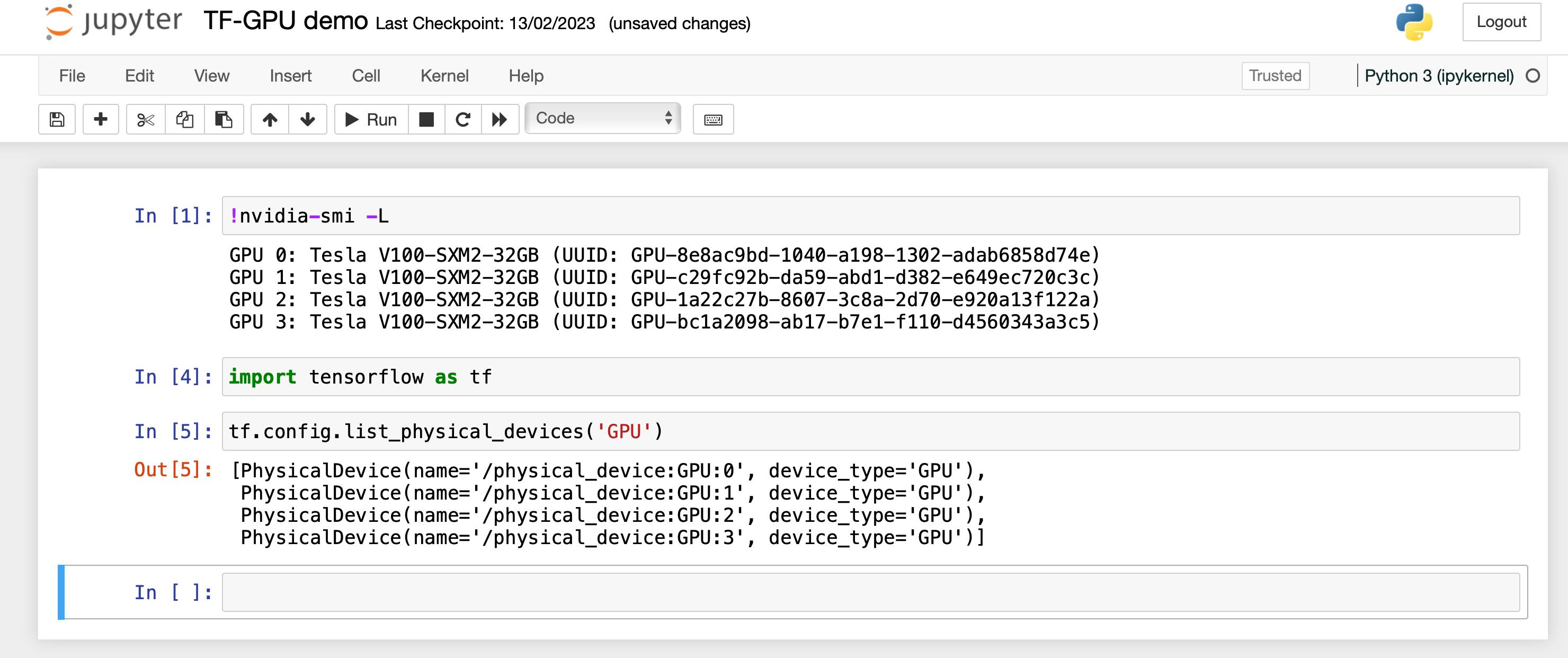

My two worlds met at the intersection of powerful hardware and Deep Learning model training (bringing NVIDIA and TensorFlow together). But finding enough computational power to train my AI models efficiently was not easy.

However, I became hopeful when I discovered what a High-Performance Computing (HPC) machine is. What's even better? There was one on my college campus called The Foundry.

It is essentially meant for researchers and postdocs who need to spend their time analysing experiments rather than waiting for them to finish running, especially under mission-critical pressure such as an approaching paper deadline or conditions set by an advisor.

As a student at the same college, I was able to get access to our HPC for free. I was very excited to see how I could train my Convolutional Neural Networks (CNNs) on a cluster of GPUs, readily available whenever I needed them.

What I failed to understand was that HPCs have very different environments from the computing resources I was used to, such as cloud platforms. This was a major setback for me because I was unable to launch a Jupyter Notebook on the cluster and build my models interactively.

I always prefer developing my AI models in an Interactive Python Notebook because it gives me the flexibility of trial and error and lets me visualize data before I feed it to my model for training. So I ditched the idea completely and instead relied on free or semi-free resources offered by cloud services such as GCP, AWS and Kaggle.

This approach was convenient and even helped me become a certified TensorFlow developer.

Now, I have finally found a solution to the problem I gave up on about a year ago: SSH tunneling.

It may not sound like the solution you're expecting, so here is what went wrong first. When I need to use a GPU cluster, I am first welcomed by the login node, and this node does not have access to the GPUs. So I begin an interactive session using SLURM to get access to a GPU environment. Once there, I assumed all I needed to do was launch a Jupyter Notebook and copy-paste its endpoint URL into my browser to begin developing my AI models. This didn't work, and that's why I gave up on it.

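What I should have done differently was SSH into my HPC with port forwarding and then start a CUDA-enabled session (the same as a GPU session). Then, in a new Terminal tab, I SSH into the login node first and from there SSH into my CUDA-enabled session. Port forwarding matters because the notebook server runs on the cluster, not on my laptop, so my browser needs a tunnel to reach it.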

Finally, I launch a Jupyter Notebook following the same steps as before and it opens up as expected when I copy-paste the URL into my browser.
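To give a rough idea of the shape of those steps, here is a minimal Python sketch that only prints the commands I would type in each terminal. Everything specific in it is an assumption for illustration: the username `student`, the hosts `login.hpc.example.edu` and `gpu-node-01`, port `8888`, the `srun --gres=gpu:1 --pty bash` request, and the second `-L` on the hop to the compute node are placeholders, not The Foundry's actual setup. Your cluster may name things differently or need other SLURM options.

```python
def tunnel_steps(user, login_host, compute_node, port=8888):
    """Build the shell commands for a two-hop SSH tunnel to Jupyter.

    All arguments are hypothetical placeholders, not real cluster names.
    """
    forward = f"{port}:localhost:{port}"
    return [
        # Terminal 1, on the laptop: log in and forward the port to the login node.
        f"ssh -L {forward} {user}@{login_host}",
        # Terminal 1, on the login node: ask SLURM for an interactive GPU session
        # (assumed flags; check what your cluster expects).
        "srun --gres=gpu:1 --pty bash",
        # Terminal 2, on the laptop: a second, plain login.
        f"ssh {user}@{login_host}",
        # Terminal 2, on the login node: hop to the compute node, extending the forward.
        f"ssh -L {forward} {compute_node}",
        # On the compute node: start Jupyter without trying to open a browser.
        f"jupyter notebook --no-browser --port={port}",
    ]


if __name__ == "__main__":
    for step in tunnel_steps("student", "login.hpc.example.edu", "gpu-node-01"):
        print(step)
```

With a chain like this, the `localhost` URL that Jupyter prints on the compute node should resolve in the laptop's browser, because each hop carries the same port one step closer to the notebook server.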

This straightforward solution to a problem I gave up on a long time ago was shown to me by a good friend I meet at the ACM Data meetings that I run on campus every week. I am very grateful to him for helping me save money on computation!

I would make a tutorial, but it would be very specific to my campus's HPC and would not work as-is for you (assuming you have access to an HPC in the first place).